GPT Prompt

This module is intended for use inside the normal chat flow. It generates a response from a prompt template, which does not have to be a user message. Inside the prompt template you can use LoyJoy variables as well as expressions. The GPT model generates the response from the prompt and the system message, and the output is stored in the variable gpt_output.
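The flow described above can be sketched in a few lines of Python. This is only an illustration and not LoyJoy's implementation: the `${...}` placeholder syntax is the one used by Python's `string.Template`, the variables `first_name` and `product` are hypothetical, and `fake_model` is a stand-in that echoes its input instead of calling a GPT model.

```python
from string import Template

# Hypothetical variables collected earlier in the chat.
variables = {"first_name": "Sandra", "product": "espresso machine"}

# Hypothetical prompt template. The ${...} syntax is Python's and is used
# here for illustration only.
prompt_template = Template(
    "Write a one-sentence welcome for ${first_name}, "
    "who asked about the ${product}."
)


def fake_model(system_message: str, prompt: str) -> str:
    """Stand-in for the GPT call; echoes its input so the sketch runs offline."""
    return f"[{system_message}] {prompt}"


# 1. Fill the template with the current variable values.
prompt = prompt_template.substitute(variables)

# 2. Send the prompt and the system message to the model.
response = fake_model("You are a friendly assistant.", prompt)

# 3. Store the response so later steps in the flow can use it.
variables["gpt_output"] = response
print(variables["gpt_output"])
```

The point of the sketch is the order of steps: variables are resolved first, the model sees only the finished prompt, and the result lands in gpt_output.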

You can adapt the prompt to your needs by applying prompting techniques, for example by adding explicit instructions or example answers.

Use Cases

The GPT Prompt module is flexible and supports a variety of use cases. Here are some examples:

- Generate a JSON string to query an API based on user input

- Generate a SQL query based on user input

- Use the GPT model to make a decision based on user input
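As an illustration of the decision use case, the following sketch builds a prompt that restricts the model to a fixed set of labels and then validates the answer before the flow branches on it. The labels in `ALLOWED` and the helper names `build_prompt` and `parse_decision` are hypothetical and not part of LoyJoy.

```python
# Hypothetical set of decisions the chat flow can branch on.
ALLOWED = ("refund", "shipping", "other")


def build_prompt(user_message: str) -> str:
    """Build a prompt that asks the model for exactly one allowed label."""
    labels = ", ".join(ALLOWED)
    return (
        f"Classify the message into exactly one of: {labels}.\n"
        "Answer with the label only.\n\n"
        f"Message: {user_message}"
    )


def parse_decision(gpt_output: str, default: str = "other") -> str:
    """Normalize the model output and fall back to a default if it is not an allowed label."""
    label = gpt_output.strip().strip(".\"'").lower()
    return label if label in ALLOWED else default


print(build_prompt("Where is my parcel?"))
print(parse_decision(" Refund.\n"))
```

Validating the output matters because a model may add punctuation or extra words even when asked for a single label. Falling back to a default keeps the flow predictable.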

Response format

Normally, GPT models generate text responses. However, you can also force the GPT model to produce a JSON response. This is helpful if you want to extract structured information from the model response. To do this, you need to create mappings for the attributes you are interested in.

Imagine you prompt GPT to read an email and extract the sender and the subject. The email in the prompt could look like this:

Dear Ladies and Gentlemen,

I am writing to you to inform you about the new product we have launched....

Cheers,

Sandra Smith

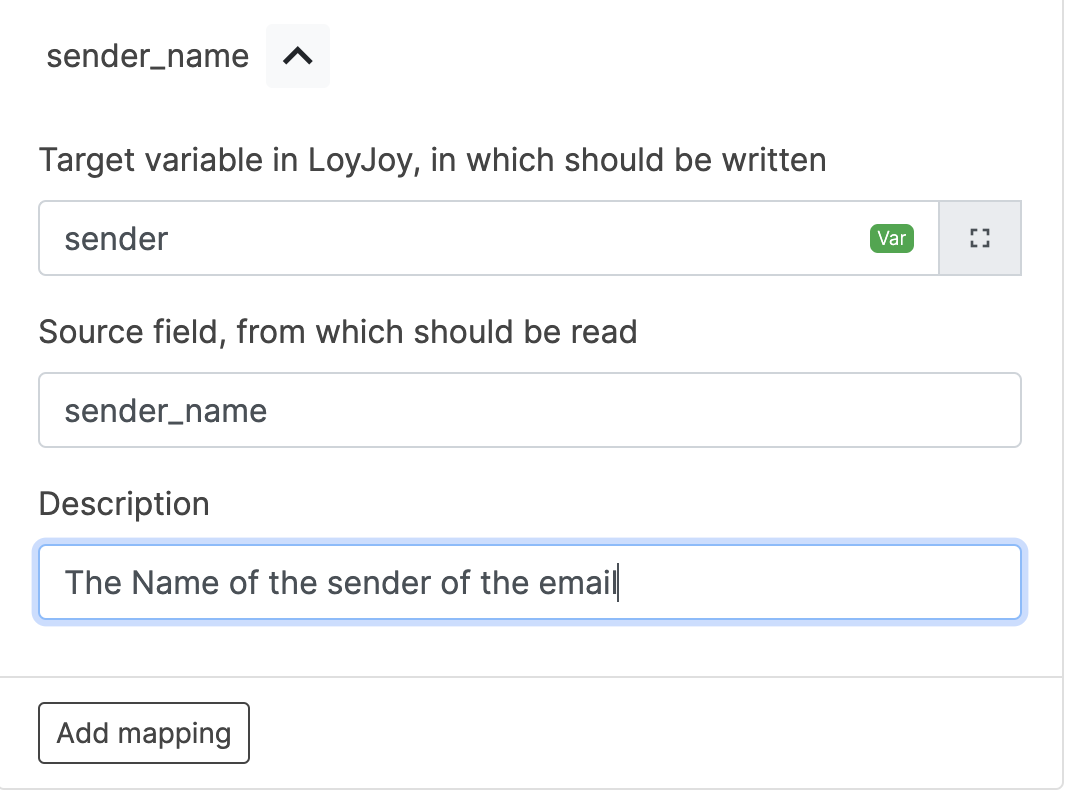

To extract the information you are interested in, create a mapping like this:

The name of the sender is then available in the variable sender. The description of the mapping helps GPT understand what you are looking for.
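Conceptually, each mapping pairs an attribute name with a description, and the JSON response is unpacked into one variable per attribute. The sketch below shows that idea under stated assumptions: the descriptions, the helper `extract_variables`, and the example response (including the subject text) are made up for illustration and do not reflect LoyJoy's internal format.

```python
import json

# Hypothetical mappings: attribute name -> description shown to the model.
mappings = {
    "sender": "Full name of the person who wrote the email",
    "subject": "Short summary of what the email is about",
}


def extract_variables(raw_response: str, mappings: dict[str, str]) -> dict[str, str]:
    """Parse a JSON response and keep only the mapped attributes that are present."""
    data = json.loads(raw_response)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return {
        name: str(data[name])
        for name in mappings
        if data.get(name) is not None
    }


# Example response, made up for illustration.
raw = '{"sender": "Sandra Smith", "subject": "New product launch"}'
result = extract_variables(raw, mappings)
print(result["sender"])  # prints "Sandra Smith"
```

Attributes that are missing from the response are skipped rather than filled with guesses, so a later step can check whether a variable was actually set.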